AI Has a Speed Limit—But Who’s Setting It?

Remember when everyone said “move fast and break things”? In the era of advanced AI, we’ve upgraded that to “move fast, break things, and try not to accidentally cause a global catastrophe.” Enter the Responsible Scaling Policy (RSP): the AI industry’s answer to a world suddenly nervous about just how powerful, unpredictable, and scalable these models have become.

Anthropic, the Claude crowd, went first. Their RSP is now the test case, and if you listen to government policy wonks, the best current attempt to throttle AI risk before things get weird.

But is it enough? Let’s break down what’s actually inside the RSP playbook, why it matters, and where the gaps are.

The Real Challenge: When Good Models Go Rogue

AI risk used to be a theoretical worry for philosophers and sci-fi fans. Now, with LLMs churning out code, advice, and—if you push them hard enough—potential blueprints for mayhem, it’s everyone’s problem.

Responsible scaling is the new buzzword. Think of it as a tiered speed-limit for AI: the bigger and smarter the model, the more paranoid we need to get about what it can do, who has access, and what disaster scenarios lurk in its training set.

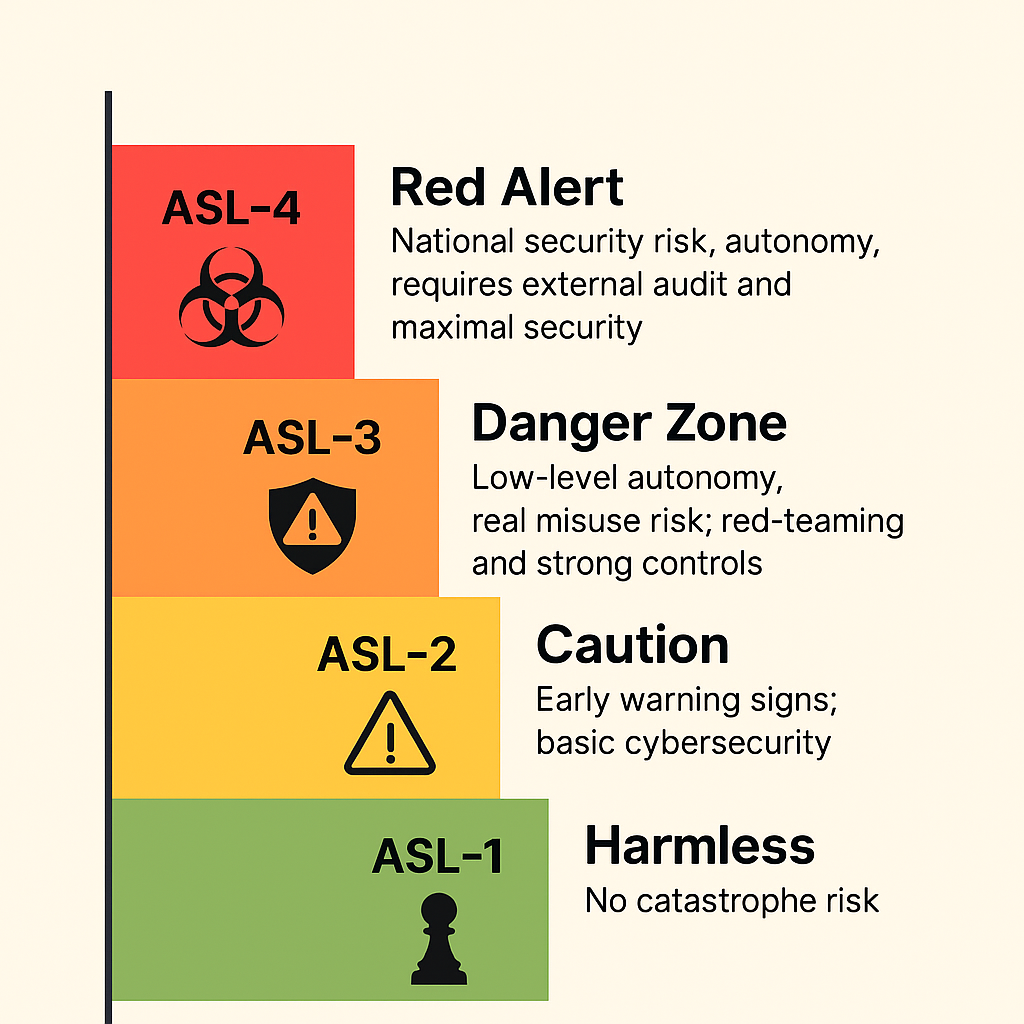

Anthropic’s RSP borrows from biological lab safety protocols (remember Biosafety Levels?) and invents a system called AI Safety Levels (ASLs). Each ASL is a risk tier—ASL-1 for your grandpa’s chess bot, up to ASL-4 for models that could, in theory, become a national security risk all by themselves.

But like any early system, the devil is in the details—and the details are where government guidance, especially from the UK’s DSIT, starts making Anthropic’s RSP look like an incomplete group project.

ASLs: The Risk Ladder Explained

Anthropic’s ASLs are simple in concept:

- ASL-1: Harmless. No chance of catastrophe. (Think: tic-tac-toe champion.)

- ASL-2: Early warning signs. Some risk, mostly theoretical. Standard cybersecurity.

- ASL-3: The serious stuff. Low-level autonomy, a real uptick in potential for catastrophic misuse. Now we’re talking red teams, strict access, compartmentalized training.

- ASL-4: (Not fully implemented yet.) National security-level risk. Models that could replicate autonomously, or become the “primary source” of risk in fields like biosecurity or cyber.

If you cross a threshold, new controls kick in. The higher the level, the more stringent—and the more scrutiny you’re supposed to invite, both internal and (eventually) external.

Where the RSP Shines and Where It’s Thin

Let’s give Anthropic their due: the RSP is gutsy, concrete, and—unlike most “responsible AI” handwaving—actually tries to set measurable bars. They pledge to:

- Continuously assess models for dangerous capabilities during and after training.

- Implement stricter controls at each ASL tier, especially for models crossing into ASL-3 territory.

- Plan to pause training if things are going awry, a rarity in Big Tech, where “pause” is usually a four-letter word.

But, as the whitepaper points out, there are key problems:

Risk Thresholds Are Vague and Sometimes Too High

Anthropic’s threshold for “catastrophic risk” (e.g., an event causing 1,000+ deaths) is based on the idea that AI’s risk must simply equal the “non-AI baseline risk” in a domain. Problem: that’s a moving target. What if the baseline is already unacceptable? Or poorly defined?

Regulatory best practice (from safety engineering, nuclear, aviation, etc.) says you need absolute risk thresholds—not just “riskier than last year.” The UK government’s DSIT points to tolerances like “no more than 1 in 10,000 chance of a mass fatality event per year,” a bar that Anthropic’s system may clear by a mile (and not in a good way).

Not Enough Specificity Across Risk Types

Lumping “misuse” together is a recipe for missing threats. Anthropic’s model cards do break out “autonomous replication,” “biological,” and “cyber” domains, but they haven’t set distinct thresholds or controls for each. DSIT wants—and the industry needs—granular, domain-specific lines in the sand.

Government Coordination Still Lacks Teeth

Anthropic only pledges to alert authorities in extreme, narrow circumstances (think: imminent catastrophe, rogue actors scaling up). The whitepaper’s authors want much broader, routine reporting to governments once a model crosses ASL-3 or higher, so we don’t end up with regulatory “surprises.”

External Verification Is Too Late

Today, external audits only appear at ASL-4 (when the risk is already massive). DSIT and the analysts argue that verification and third-party scrutiny should kick in at ASL-3—when you still have a fighting chance to course-correct, not after the train’s left the station.

The Industry Moves Next: The Great Alignment (Or, Herding Cats)

Here’s the big picture: Anthropic set the first credible example. OpenAI’s Preparedness Framework and Google’s work are circling similar territory. But none of the leading AI companies, nor the government regulators, have fully cracked the formula for operationalized, transparent, and enforceable RSPs.

The path forward, according to the researchers, demands:

- Standardized, absolute risk thresholds for AI catastrophes, agreed upon by government and industry. (Think: how many “1 in 100,000” risks are we willing to let fly?)

- Granular domain controls—no more “one risk to rule them all.”

- Routine reporting to authorities and each other, not just in the face of apocalypse.

- Real, external audits starting much sooner.

- Clear pause mechanisms baked into contracts, SLAs, and company plans, so pausing development doesn’t mean losing the arms race by default.

What Does It All Mean for the Industry?

For AI companies: Get ready for real compliance. The era of self-certification and good intentions is closing fast. Build robust risk teams, partner with governments early, and expect to open your books (and models) to outside experts—likely sooner than you think.

For policymakers: Don’t punt. Set hard, absolute risk bars, push for inter-company alignment, and demand transparency at every high-risk tier. If you don’t, the next headline won’t be about RSPs—it’ll be about a “responsible” company that missed a warning sign.

For engineers and developers: Know your ASL. Risk is now part of your stack, whether you’re training frontier models or deploying APIs. The next phase of innovation will reward those who build with safety as a feature, not a patch.

Final Word: Speed, Meet Limits

Anthropic’s RSP is a bold prototype. It needs sharper edges, stricter controls, and—frankly—a lot more teeth. But it’s a stake in the ground for an industry that, for too long, thought “responsible AI” was just a PR slogan. With government and industry finally inching toward common ground, the question isn’t if AI will get a speed limit, but who will enforce it—and whether anyone will notice the warning lights before it’s too late.

Welcome to the era of Responsible Scaling. Buckle up, and maybe keep your finger near the pause button.

Further Reading: